Converting Categorical Variables for Machine Learning: One-Hot Encoding and Label Encoding Techniques

Data Preprocessing

When dealing with categorical variables in your dataset, consider using one-hot encoding or label encoding to convert them into a numerical format that machine learning models can understand. You can apply one-hot encoding efficiently using libraries like pandas to create binary columns for each category.

pd.get_dummies can one-hot encode several categorical columns in a single call. Pass their names in the columns argument, and each listed column is replaced by a set of binary columns prefixed with the original column name. This is convenient for datasets with many categorical features.

import pandas as pd

# Example dataset with multiple categorical columns

data = pd.DataFrame({

'Color': ['Red', 'Blue', 'Green', 'Red', 'Green'],

'Size': ['Small', 'Medium', 'Large', 'Small', 'Large']

})

# Apply one-hot encoding to all categorical columns

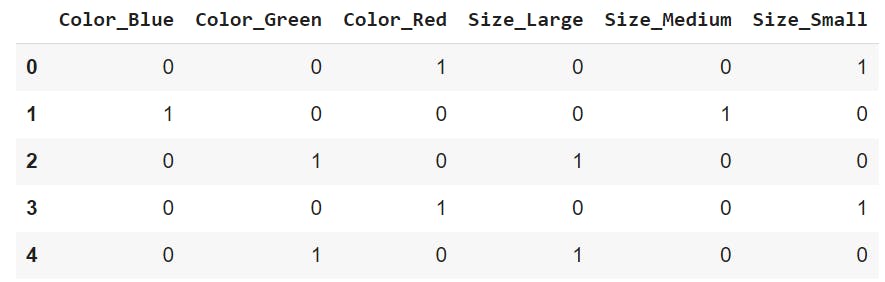

data_encoded = pd.get_dummies(data, columns=['Color', 'Size'])

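The resulting DataFrame has one binary column per category: Color_Blue, Color_Green, Color_Red, Size_Large, Size_Medium and Size_Small. Each row holds a 1 (True in pandas 2.0 and later) in the column matching its category and 0 (False) in the others.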
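To check the structure described above, the sketch below re-runs the same encoding and inspects the result. It assumes pandas 2.x, where get_dummies returns booleans by default, so it passes dtype=int to get 0/1 integers. The helper lists color_cols and size_cols are illustrative names, not part of the original example.

```python
import pandas as pd

data = pd.DataFrame({
    "Color": ["Red", "Blue", "Green", "Red", "Green"],
    "Size": ["Small", "Medium", "Large", "Small", "Large"],
})

# dtype=int requests 0/1 integers instead of the boolean default
data_encoded = pd.get_dummies(data, columns=["Color", "Size"], dtype=int)

print(list(data_encoded.columns))
# ['Color_Blue', 'Color_Green', 'Color_Red', 'Size_Large', 'Size_Medium', 'Size_Small']

# Within each original column's group of dummies, every row has exactly one 1
color_cols = [c for c in data_encoded.columns if c.startswith("Color_")]
size_cols = [c for c in data_encoded.columns if c.startswith("Size_")]
print(data_encoded[color_cols].sum(axis=1).tolist())  # [1, 1, 1, 1, 1]
print(data_encoded[size_cols].sum(axis=1).tolist())   # [1, 1, 1, 1, 1]
```

The row sums confirm the defining property of one-hot encoding: each original value activates exactly one column in its group.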

Label encoding replaces each category with a single integer label, which makes it a candidate for ordinal categorical variables (categories with a meaningful order).

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Example dataset with an ordinal column 'Size'
data = pd.DataFrame({'Size': ['Small', 'Medium', 'Large', 'Small', 'Large']})

# Apply label encoding (codes follow the alphabetical order of the labels)
label_encoder = LabelEncoder()
data['Size_encoded'] = label_encoder.fit_transform(data['Size'])
```

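Output: Size_encoded is [2, 1, 0, 2, 0], because LabelEncoder numbers the classes in sorted order (Large → 0, Medium → 1, Small → 2).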
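To see which integer LabelEncoder gave each category, you can read its fitted classes_ attribute (the position in the array is the code) and use inverse_transform to go back from codes to labels. This is a small sketch on the same toy data; the mapping dictionary is an illustrative name.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

data = pd.DataFrame({"Size": ["Small", "Medium", "Large", "Small", "Large"]})
label_encoder = LabelEncoder()
data["Size_encoded"] = label_encoder.fit_transform(data["Size"])

# classes_ stores the learned categories in sorted order; index = integer code
mapping = {cls: code for code, cls in enumerate(label_encoder.classes_)}
print(mapping)  # {'Large': 0, 'Medium': 1, 'Small': 2}

# inverse_transform maps integer codes back to the original strings
print(label_encoder.inverse_transform([0, 2]).tolist())  # ['Large', 'Small']
```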

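Label encoding assigns a unique integer to each category. That suits ordinal data, such as sizes (Small < Medium < Large), only when the integers follow the real order, and scikit-learn's LabelEncoder does not guarantee this: it sorts labels alphabetically, so here the codes come out reversed (Large → 0, Medium → 1, Small → 2), and for other labels the alphabetical order can be arbitrary. LabelEncoder is documented as a tool for encoding target labels; for ordinal input features, state the order explicitly, for example with scikit-learn's OrdinalEncoder and its categories parameter, or with a pandas map. Be cautious when label encoding nominal variables (categories with no order), because the integers imply a ranking and spacing that models such as linear regression may treat as meaningful.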
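Here is a minimal sketch of encoding Size with an explicit order. It swaps in scikit-learn's OrdinalEncoder (not used in the original post) and, as an alternative, a plain pandas map. The names size_order and size_rank, and the output columns Size_ordinal and Size_mapped, are illustrative assumptions.

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

data = pd.DataFrame({"Size": ["Small", "Medium", "Large", "Small", "Large"]})

# The real order of the categories, stated once
size_order = ["Small", "Medium", "Large"]

# Option 1: OrdinalEncoder with the order spelled out (one list per input column).
# It expects 2-D input (hence data[["Size"]]) and returns floats, so flatten and cast.
encoder = OrdinalEncoder(categories=[size_order])
data["Size_ordinal"] = encoder.fit_transform(data[["Size"]]).ravel().astype(int)

# Option 2: a pandas map with an explicit category -> rank dictionary
size_rank = {size: rank for rank, size in enumerate(size_order)}
data["Size_mapped"] = data["Size"].map(size_rank)

print(data)
```

Both columns encode Small → 0, Medium → 1, Large → 2, so the integers now respect Small < Medium < Large. OrdinalEncoder has the advantage that a fitted encoder can be reused on new data inside a scikit-learn pipeline; the map is simpler for one-off preprocessing.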

Choose the encoding method based on the nature of your categorical data. Proper encoding is essential for accurate model training. #DataPreprocessing #FeatureEngineering